Digital Resistance: Call for papers

Special thematic issue of the Journal of Resistance Studies

Editors: Nora Madison & Mathias Klang

This call as a pdf is available here

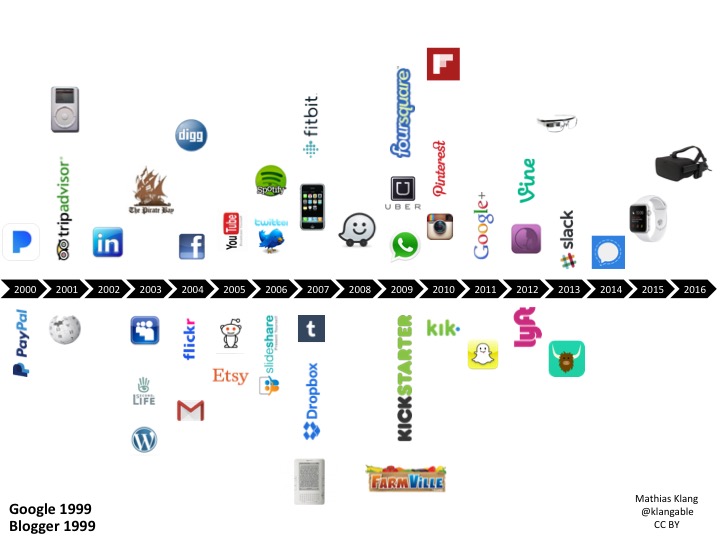

In many spaces, mobile digital devices and social media are ubiquitous. These devices and applications provide the platforms with which we create, share, and consume information. Many of us obtain much of our news and social information via the personal screens we constantly carry with us. It is therefore unsurprising that these devices have also become integral to acts of social activism and resistance.

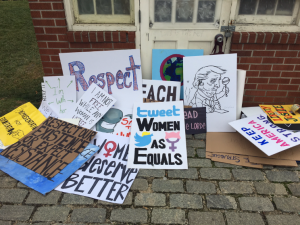

This digital resistance is most visible in the virtual social movements found behind hashtags such as #BlackLivesMatter, #TakeAKnee, and #MeToo. However, it would be an oversimplification to limit digital resistance to its most popular expressions. Video sharing on YouTube, Twitter, and Facebook has revealed abuses of police power, racist attacks, and misogyny. The same type of device is used to record, share, and view instances of abuse. The devices and platforms are also used to organize and coordinate responses, ranging from online naming and shaming to online and physical protests. The devices and the platforms are then used to share the protests and their results. More and more, the device and the platform are the keyhole through which resistance must fit.

Our devices and access to platforms enable the creation of self-forming and self-organizing resistance movements capable of sharing alternative discourses in advocating for diverse social agendas. This freedom shapes the individual’s relationship to both power and resistance, as well as their identity and awareness as an activist. It is somewhat paradoxical that something so central to the activist identity and the performance of resistance is in essence created and run as a privatized surveillance machine.

Digital networked resistance has received a great deal of media attention recently. The research field is developing, but more needs to be understood about the role of technology in the enactment of resistance. Our goal is to explore the role of both digital devices and platforms in the processes of resistance.

This special issue aims to understand the role of technology in enabling and subverting resistance. We seek studies on the use of technology in acts of protest against official power, as well as the use of technology in contesting power structures inherent in the technology or the technological platforms. Contributions are welcome from different methodological approaches and socio-cultural contexts.

We are looking for contributions addressing resistance, power, and technology. We invite original works on topics including, but not limited to:

- Problems with the use of Digital Resistance

- Powerholders’ capacity to map Digital Resistance activists through surveillance

- How does Digital Resistance differ from, and function in comparison with, non-digital resistance?

- Problems and advantages of combining Digital Resistance and non-digital resistance

- Resistance to platforms

- Hashtag activism & hijacking

- Online protests & movements

- The use of humor/memes as resistance

- Selfies as resistance

- Globalization of resistance memes

- Ethical implications of digital resistance

- Online ethnography (testimonials/narratives provided by online participants)

- Issues concerning privacy, surveillance, anonymity, and intellectual property

- Effective rhetorical strategies and aesthetics employed in digital resistance

- Digital resistance: Research methods and challenges

- The role of technology activism in shaping resistance and political agency

- Shaping the digital protest identity

- Policing digital activism

- Digital resistance as culture

- Virtual resistance communities

- The affordances and limitations of the technological tools for digital resistance

Abstracts should be 500–750 words (references not included).

Send abstracts to noramadison@gmail.com

Important Dates

Abstracts by 15 January 2019

Notification of acceptance 15 February 2019

Submission of final papers 1 April 2019

- Maximum 12,000 words (all inclusive)