What is a city? Who gets to decide how it should be used, and by which groups? To address this, I began with two examples intended to demonstrate the conflict. I purposely chose not to use large-scale examples.

The first example took place in 2009, when the Harvard professor Henry Louis Gates Jr was arrested at his own home after police responded to a report of a break-in. Even though he was able to identify himself and show that it was his own address, the police "…arrested, handcuffed and banged in a cell for four hours arguably the most highly respected scholar of black history in America."

The second example was Oscar winner Forest Whitaker being accused of shoplifting and patted down by an overzealous employee at the Milano Market on the Upper East Side in Manhattan. This second example is interesting because the market apologized, saying of the employee: "He's a decent man, I'm sure he didn't mean any wrongdoing, he was just doing his job," and calling the incident "a sincere mistake". Notably, if you search for "Forest Whitaker deli", most of the hits are about the apology rather than the incident itself.

These two minor events would never have come to anyone's attention had they not happened to celebrities with the power to make the news. They demonstrate that, even among sincere, well-meaning people, some groups are thought to have less right of access to the city.

Ta-Nehisi Coates wrote an excellent op-ed, "The Good, Racist People", which ends with him writing about the deli:

The other day I walked past this particular deli. I believe its owners to be good people. I felt ashamed at withholding business for something far beyond the merchant’s reach. I mentioned this to my wife. My wife is not like me. When she was 6, a little white boy called her cousin a nigger, and it has been war ever since. “What if they did that to your son?” she asked.

And right then I knew that I was tired of good people, that I had had all the good people I could take.

After this introduction, the lecture moved on to demonstrate the power of maps. I began by describing the events that led Dr John Snow to identify the Broad Street pump as the cause of the 1854 Soho cholera outbreak.

Dr Snow did not accept the miasma ("bad air") theory of what causes cholera. To prove that the outbreak was connected to the public water pump on Broad Street, he plotted the cholera victims on a map. The cases formed a cluster around the pump.

With the help of this illustration he was able to show that the disease was local and get the pump handle removed. The cholera cases decreased rapidly from that point.

The immediate cause of the outbreak was human waste getting into the water supply, most probably from a mother washing an infected child's diapers. But the fundamental reason for the huge death toll was the lack of sewers and sanitation in this poorer area of the city. By insisting on the miasma theory, the city could claim to be free of responsibility.

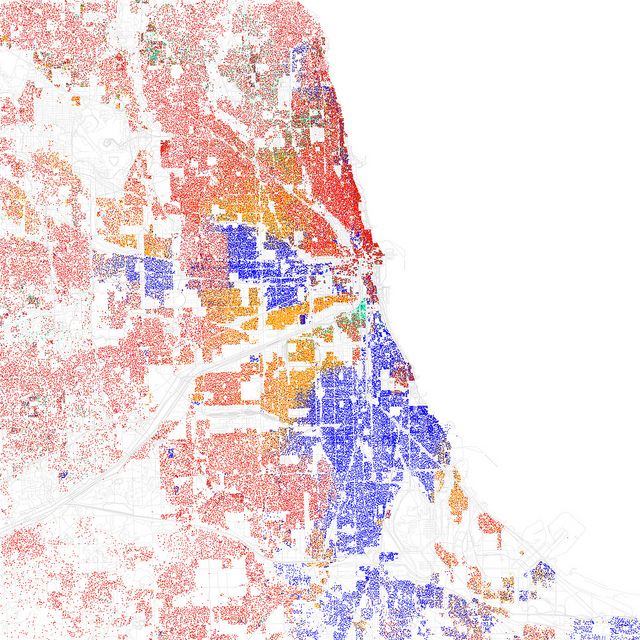

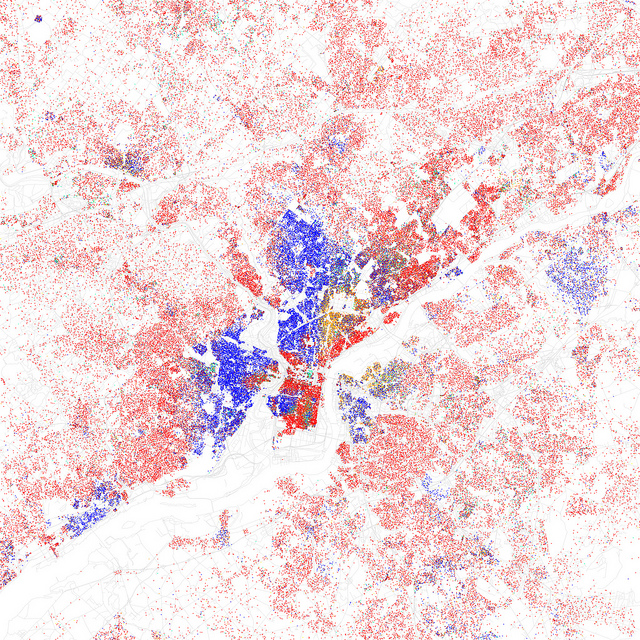

In the next part of the lecture, I wanted to discuss how cities can maintain segregation and unequal services even though their rules are presented as fair and unbiased. To do this, I used a series of maps created by Eric Fischer that show how cities are segregated by race and ethnicity.

On each map, one dot represents 500 residents. Red is White, Blue is Black, Green is Asian, Orange is Hispanic, Yellow is Other. The images are licensed CC BY-SA. Several of the maps are of interest and well worth studying. Here, I present only Chicago and Philadelphia:

Chicago (legend as above).

Philadelphia (legend as above).

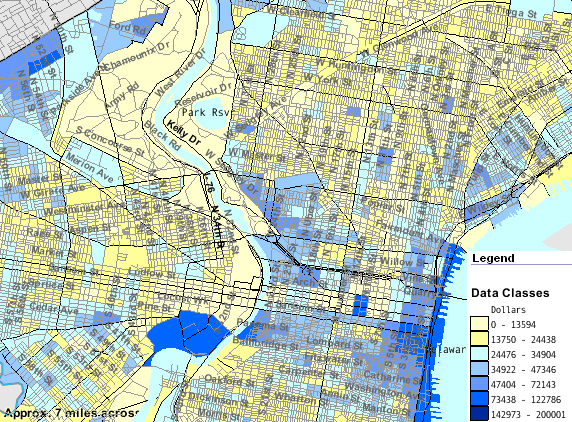

As we are in Philadelphia, I also included a map of household income (Demographics of Philadelphia).

At this point, I moved the discussion to the distinction between public and private space. I used Wikipedia's definitions:

A public space is a social space that is generally open and accessible to people. Roads (including the pavement), public squares, parks and beaches are typically considered public space.

To a limited extent, government buildings which are open to the public, such as public libraries are public spaces, although they tend to have restricted areas and greater limits upon use.

Although not considered public space, privately owned buildings or property visible from sidewalks and public thoroughfares may affect the public visual landscape, for example, by outdoor advertising.

Because the distinction between private and public space will be discussed in depth in a future lecture, I kept this part of the discussion fairly general. I then moved on to the problems with exercising two of our rights in "public space": free speech and the right of assembly.

Free speech: Not wanting to delve into the theory of this fascinating area, I jumped straight into the heart of the discussion with a quote from Salman Rushdie: "What is freedom of expression? Without the freedom to offend, it ceases to exist." The point is that we don't need protection in order to conform, but we do need it in order to evolve.

For this lecture, I brought up outdoor advertising. This activity is dominated globally by one corporation: Clear Channel Outdoor Holdings is probably the biggest controller of outdoor communication in the world. It has the power to decide which messages are shown and which are not. It has accepted advertising for fashion brands that promote harmful body images, and even for brands that have been accused of glorifying gang rape. For a look at this disturbing trend in advertising, see "15 Recent Ads That Glorify Sexual Violence Against Women".

The messages pushed out on billboards can arguably be seen as one-sided participation in public debate. Altering these messages (adbusting), or even correcting wilfully false information on billboards, is treated as vandalism. To show that something can be done, I played a clip of a report on the Clean City Law, under which the city of Sao Paulo has banned outdoor advertising.

However, when Baltimore attempted to introduce a billboard tax in 2013, Clear Channel Outdoor argued that billboards are free speech protected by the First Amendment, and that the tax would therefore be a limitation of the corporation's human rights.

To illustrate the right of assembly, I used the Occupy Wall Street protests, where the right to protest was supported (in theory) by Mayor Bloomberg:

“people have a right to protest, and if they want to protest, we’ll be happy to make sure they have locations to do it.”

Despite this sentiment, New York's parks close at dusk or at 1 a.m. (even the ones without gates), which prevents demonstrators from staying overnight. To get around this and continue the protests, the demonstrators moved to the privately owned Zuccotti Park, where they could stay overnight. Eventually the protesters were dispersed on the grounds that conditions in the park were unsanitary.

The slides I used are here: