What are we doing online? How did we become the sharing culture that we are today? And what are the implications of this change? These were the questions that we addressed today in class.

We began with a discussion of what online safety looked like in the early 2000s. The basic idea was that you should never put your real name, address, image, age or gender online. Bad things happened if you shared this openly online, and the media joyously reported on the horrors of online life.

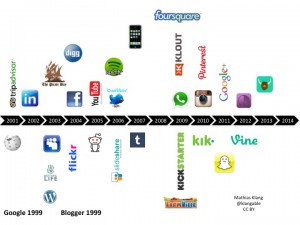

When Facebook came along, everything changed. Real names and huge amounts of real information became the norm. Then we got cameras on phones (not an inevitable progression), and when smartphones were added to the mix, sharing exploded.

Sherry Turkle was one of the most prominent researchers studying the early days of Internet life. Her 1995 book Life on the Screen was optimistic about the potential impact of technology and the ways we could live our lives online. After the rise of social media, Turkle took a less positive view of technology in her 2011 book “Alone Together: Why We Expect More from Technology and Less from Each Other”. In this work she is more concerned about the negative impact of internet-connected mobile devices on our lives.

To discuss her work, I took some key quotes from her TED talk on Alone Together.

The illusion of companionship without the demands of friendship…

Being alone feels like a problem that needs to be solved…

I share therefore I am… Before it was: I have a feeling, I want to make a call. Now it’s: I want to have a feeling, I need to send a text…

If we don’t teach our children to be alone, they will only know how to be lonely

The discussions in class around these quotes were ambivalent. Yes, there was a level of recognition in the ways technology was being portrayed, but there was also skepticism about the very negative image of technology.

Then there was the fact, mentioned in her talk, that she was no longer just a young researcher; she was now the mother of teenagers. She looked at their use of technology and despaired. What did this mean? Was a technophobia growing with age? Were her fears and generalizations a nostalgia for a past that never was?

The Douglas Adams quote from The Salmon of Doubt felt appropriate:

Anything that is in the world when you’re born is normal and ordinary and is just a natural part of the way the world works. Anything that’s invented between when you’re fifteen and thirty-five is new and exciting and revolutionary and you can probably get a career in it. Anything invented after you’re thirty-five is against the natural order of things.

So is that what’s happening here? Is it just that technology has moved on to a point where the researcher feels it is “against the natural order of things”? This led to a fruitful discussion.

From this point we moved the discussion to the process of sharing, and the ways in which, no matter what you think, technology has changed our behavior. One example is the way we feel the need to document what happens around us to a degree that was not possible before.

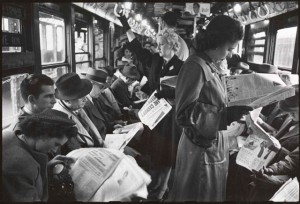

The key question is whether we are changing, and if so, whether technology is driving this change. Of course, not all of our behavior is a direct result of our technology. For example, the claims that we are stuck in our devices and anti-social can be countered with images such as these.

Commuters on trains were rarely sociable and talkative with each other, and therefore they needed a distraction. Newspapers were a practical medium at the time, and now they are being replaced by other media.

However, the key feature of social media may not be what we consume but the fact that we are participating in creating the content (hence the term user-generated content).

What we share and how we share has become a huge area of study and parody. The video below is a great example of this. Part of what is interesting is that most people who watch it feel a sting of recognition. We are all guilty of sharing in this way.

This sharing has raised concerns about our new lifestyles and where we are headed. One example of this techno-concern (or techno-pessimism) can be seen in the spoken poem Look Up by Gary Turk.

Of course, this is one point of view, and it wouldn’t be social media if it weren’t met with another point of view. There are several responses to Look Up; my favorite is “Look Down (Look Up Parody)” by JianHao Tan.

From this point I moved to a discussion of a more specific form of sharing: the selfie. The first thing to remember is that the selfie is not a new phenomenon. We have been creating selfies since we first learned to paint. Check out the awesome self-portrait by Gustave Courbet.

But of course, without our camera phones we would not be able to follow the impulse to photograph ourselves. Without our internet connections we would not have the ability to impulsively share. These things are aided by technology.

The Telegraph has an excellent short video introduction to the selfie that includes some of the most famous and infamous examples.

In preparation for this class I had asked the students to email me a selfie (this was voluntary), and at this stage I showed them their own pictures (and my own selfie, of course). The purpose of this was to ground the discussion of the selfie in their own images rather than in an abstract ideology.

We discussed the idea of a selfie aesthetic: the way we take pictures is learned, and then we learn what is and is not acceptable to share. All of this is a process of socialization into communicating through selfies.

Questions we discussed were:

– Why did you take that image?

– Why did you take it that way?

– Why did you share it?

– What was being communicated?

Then we moved to the limits of selfie sharing: what was and was not permissible. Naturally, these limits are set and enforced differently in different social circles. We discussed the belfie as one possible outer limit of permissible communication.

But the belfie could be seen as tame compared to the funeral selfie, a subgenre that has its own Tumblr.

However, the selfie that sparked the most discussion was the Auschwitz selfie, which created a Twitter storm when it was first posted and continues to raise questions about what can and should be communicated, and in what manner.

The idea of the selfie as communication opens up new forms of communication and innovation. One such example is the picture of a group of Brazilian politicians purportedly taking a selfie. This is cool because the politicians want to appear current and modern and therefore try to do what everyone is doing. They are following the selfie aesthetic, which has itself become an accepted form of communication online.

Here are the slides I used (I have taken out the student selfies).